Rock Bolt Perception – Detection & 6-DoF Pose Estimation for Mining Automation

Organization: ABB Robotics, Umeå University, Sweden

Timeline: Mar 2025 – Jun 2025

RGB-D Perception · YOLOv8 · Mask R-CNN · PointNet++ · ROS2 · Mining Automation

Problem Context

In underground mining, manual handling of rock bolts is hazardous and inefficient. Automating this task with robotic grasping requires robust detection and accurate 6-DoF pose estimation of cylindrical rock bolts under adverse sensing conditions: dust, cluttered scenes, partial occlusion, and poor depth quality.

The objective of this project was to develop a robust perception pipeline capable of operating on noisy RGB-D data from conveyor systems and supporting autonomous grasp planning for industrial mining robots.

System Overview

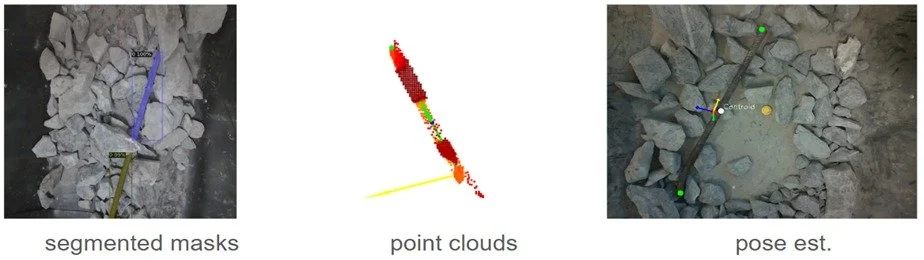

The system processes RGB-D input through object detection and instance segmentation to isolate individual rock bolts in cluttered scenes. Segmented regions are projected into 3D space to generate object-level point clouds, from which a PointNet++-based model estimates the 6-DoF pose.
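The projection step can be sketched with the standard pinhole camera model: each masked pixel (u, v) with depth z maps to x = (u - cx)·z / fx and y = (v - cy)·z / fy. This is a minimal sketch assuming a depth image aligned to the RGB frame, known intrinsics (fx, fy, cx, cy), and depth stored in millimetres; the function name and parameters are illustrative, not the project's actual code.

```python
import numpy as np

def mask_to_point_cloud(depth, mask, fx, fy, cx, cy, depth_scale=0.001):
    """Back-project the pixels of one instance mask into a camera-frame point cloud.

    depth: (H, W) raw depth image (assumed millimetres when depth_scale=0.001)
    mask:  (H, W) boolean instance mask from the segmentation stage
    fx, fy, cx, cy: pinhole intrinsics of the depth-aligned camera (assumed known)
    """
    z = depth.astype(np.float64) * depth_scale   # convert raw depth to metres
    valid = mask & (z > 0)                       # drop masked pixels with missing depth
    v, u = np.nonzero(valid)                     # row (v) and column (u) indices
    z = z[v, u]
    x = (u - cx) * z / fx                        # pinhole back-projection
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=1)           # (N, 3) points in the camera frame
```

Filtering out zero-depth pixels matters here: noisy RGB-D sensors often report missing depth as 0, and keeping those pixels would collapse points onto the camera centre.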

The perception output is integrated into ROS2 and validated through grasp feasibility checks using an ABB industrial arm simulation in RViz, enabling perception-to-action verification.
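ROS2 pose messages such as `geometry_msgs/msg/Pose` encode orientation as a quaternion (x, y, z, w). For a cylindrical bolt, rotation about its own axis is not observable, so one hedged way to fill that field is the shortest rotation that maps a reference axis (assumed here to be the gripper-frame z-axis) onto the estimated bolt axis. The function below is a hypothetical helper, not part of any ROS2 API; it returns a plain tuple that could be copied into the message fields.

```python
import numpy as np

def axis_to_quaternion(axis, ref=(0.0, 0.0, 1.0)):
    """Shortest-arc quaternion (x, y, z, w) rotating unit vector `ref` onto `axis`.

    Roll about the bolt axis is left at zero because a cylinder is
    rotationally symmetric about it (an assumption of this sketch).
    """
    a = np.array(ref, dtype=float)    # np.array copies, so caller inputs are not mutated
    b = np.array(axis, dtype=float)
    a /= np.linalg.norm(a)
    b /= np.linalg.norm(b)
    d = float(np.dot(a, b))
    if d < -1.0 + 1e-9:
        # Opposite vectors: rotate 180 degrees about any axis orthogonal to `ref`.
        ortho = np.cross(a, [1.0, 0.0, 0.0])
        if np.linalg.norm(ortho) < 1e-6:
            ortho = np.cross(a, [0.0, 1.0, 0.0])
        ortho /= np.linalg.norm(ortho)
        return (ortho[0], ortho[1], ortho[2], 0.0)
    c = np.cross(a, b)                # rotation axis scaled by sin(angle)
    q = np.array([c[0], c[1], c[2], 1.0 + d])
    q /= np.linalg.norm(q)            # normalise to a unit quaternion
    return tuple(q)
```

A complete grasp frame still needs a convention for the roll angle about the bolt; that choice is left to the grasp planner.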

My Role & Responsibilities

Role: Vision and perception pipeline lead

Designed the overall perception architecture combining 2D vision and 3D point-cloud processing.

Trained and evaluated Mask R-CNN on 1,000+ RGB-D images for robust segmentation under occlusion.

Integrated PointNet++ for direct 6-DoF pose regression from partial point clouds.

Deployed the pipeline in ROS2 and validated perception-to-action flow via simulated grasp planning.

Key Technical Decisions

Two alternative perception pipelines were designed and compared to balance robustness, accuracy, and deployability under mining conditions:

YOLOv8 + PCA-based Pose Estimation:

A lightweight pipeline that runs object detection and then applies principal component analysis (PCA) to the extracted point cloud to estimate bolt orientation. While computationally efficient, this approach relied on geometric assumptions rather than learned inference: the dominant direction of the extracted points is taken as the bolt axis, so pose accuracy degraded when occlusion hid part of the bolt or noisy depth data distorted the point cloud.

Mask R-CNN + PointNet++ Pipeline:

An instance segmentation–driven pipeline that isolated individual rock bolts before point-cloud extraction. PointNet++ was used to regress the full 6-DoF pose directly from partial point clouds, resulting in significantly improved robustness under clutter, partial visibility, and uneven depth quality.
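PointNet++-style networks take a fixed-size point set, and their set-abstraction layers select centroids with farthest point sampling (FPS). A minimal NumPy sketch of comparable preprocessing follows, assuming the network expects a fixed number of points (1,024 here, a hypothetical choice) centred on the cloud's mean; the actual input size and normalisation used in this project are not stated above.

```python
import numpy as np

def farthest_point_sample(points, n_samples, seed=0):
    """Greedy farthest point sampling: indices of `n_samples` well-spread points."""
    n = points.shape[0]
    rng = np.random.default_rng(seed)
    selected = np.empty(n_samples, dtype=np.int64)
    selected[0] = rng.integers(n)                  # random starting point
    # Distance from every point to its nearest already-selected point.
    min_dist = np.linalg.norm(points - points[selected[0]], axis=1)
    for i in range(1, n_samples):
        selected[i] = int(np.argmax(min_dist))     # farthest from the current set
        d = np.linalg.norm(points - points[selected[i]], axis=1)
        min_dist = np.minimum(min_dist, d)
    return selected

def prepare_input(points, n_points=1024):
    """Resample a partial bolt cloud to a fixed size and centre it (hypothetical helper).

    Returns the centred (n_points, 3) array and the removed centroid, which would
    need to be added back if the network predicts translation in the centred frame.
    """
    n = points.shape[0]
    if n >= n_points:
        idx = farthest_point_sample(points, n_points)
    else:
        # Too few points: pad by repeating randomly chosen ones.
        extra = np.random.default_rng(0).integers(n, size=n_points - n)
        idx = np.concatenate([np.arange(n), extra])
    sampled = points[idx]
    centroid = sampled.mean(axis=0)
    return sampled - centroid, centroid
```

FPS is preferred over uniform random sampling here because it keeps coverage of both bolt ends even when the visible segment is sparse.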

Comparative evaluation showed that Mask R-CNN combined with PointNet++ consistently outperformed the PCA-based approach, particularly in occluded scenes, due to more reliable object isolation and direct learning-based pose regression.
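The PCA baseline described above reduces to an eigen-decomposition of the point cloud's covariance matrix: the eigenvector with the largest eigenvalue is taken as the bolt axis. A minimal NumPy sketch, assuming the input is the (N, 3) point cloud extracted for a single bolt; the function name and the `ratio` diagnostic are illustrative additions.

```python
import numpy as np

def pca_bolt_axis(points):
    """Estimate a bolt's centroid and axis direction from an (N, 3) point cloud.

    Returns (centroid, axis, ratio), where `ratio` is the share of variance along
    the principal axis; values near 1 indicate a clearly elongated cluster.
    """
    centroid = points.mean(axis=0)
    cov = np.cov(points - centroid, rowvar=False)   # 3x3 covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)          # eigenvalues in ascending order
    axis = eigvecs[:, -1]                           # direction of largest variance
    if axis[np.argmax(np.abs(axis))] < 0:           # fix the sign ambiguity of eigenvectors
        axis = -axis
    ratio = eigvals[-1] / eigvals.sum()
    return centroid, axis, ratio
```

Because PCA only sees the points it is given, a heavily occluded bolt (a short visible segment) or stray background points lower `ratio` and tilt `axis`, which is consistent with the weaker occlusion performance reported for this pipeline.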

Results & Impact

92% detection accuracy and an average pose error of 0.028 on unseen RGB-D datasets.

0.401 robustness score under noisy and partially occluded conditions.

Successful closed-loop grasp validation in ABB robot simulation, demonstrating feasibility for autonomous mining automation.

Learnings & Limitations

Pose stability is sensitive to severe occlusion and depth noise in single-frame perception.

Temporal fusion or multi-view consistency could substantially improve robustness.

Active perception strategies could reduce ambiguity during grasp planning.
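As one illustration of the temporal fusion suggested above, per-frame estimates can be smoothed with an exponential moving average. Because a bolt's axis direction is sign-ambiguous (v and -v describe the same line), each new axis is flipped to agree with the running estimate before averaging. This is a hypothetical sketch with an assumed smoothing factor `alpha`; it is not described as part of the evaluated pipeline.

```python
import numpy as np

class PoseSmoother:
    """Exponential moving average over per-frame (centroid, axis) estimates."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha          # weight of the newest frame (assumed value)
        self.centroid = None
        self.axis = None

    def update(self, centroid, axis):
        centroid = np.asarray(centroid, dtype=float)
        axis = np.asarray(axis, dtype=float)
        axis = axis / np.linalg.norm(axis)
        if self.centroid is None:               # first frame initialises the state
            self.centroid, self.axis = centroid.copy(), axis.copy()
            return self.centroid, self.axis
        if np.dot(axis, self.axis) < 0:         # align sign with the running estimate
            axis = -axis
        a = self.alpha
        self.centroid = (1 - a) * self.centroid + a * centroid
        blended = (1 - a) * self.axis + a * axis
        self.axis = blended / np.linalg.norm(blended)   # re-normalise to unit length
        return self.centroid, self.axis
```

A smaller `alpha` suppresses more single-frame noise but reacts more slowly when the bolt moves on the conveyor.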

Pose-Estimation Workflow

(a) Segmentation mask from Mask R-CNN; (b) point-cloud generation; (c) pose estimation, colour-coded as white: centroid, green: bolt end points, yellow: camera centre.
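Given a centroid and a unit axis (from PCA or from a regressed pose), one way to recover bolt end points like those marked in green is to project the points onto the axis and take the extreme projections. A minimal sketch under that assumption; the function name is illustrative. Under occlusion these are the ends of the visible segment, not necessarily the physical bolt ends.

```python
import numpy as np

def bolt_endpoints(points, centroid, axis):
    """Return the two extreme points of an (N, 3) cloud along a given axis."""
    axis = np.asarray(axis, dtype=float)
    axis = axis / np.linalg.norm(axis)
    t = (points - centroid) @ axis            # signed distance of each point along the axis
    return centroid + t.min() * axis, centroid + t.max() * axis
```

`np.linalg.norm(p1 - p0)` on the two returned points then gives the visible bolt length.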

Multi-Stage Perception-to-Control Pipeline

Full demonstration of the end-to-end perception workflow, showing detection of single and multiple bolts, including bolts that are partially occluded or covered by rock.